According to a Positive Technologies study, cybercriminals have begun actively adopting artificial intelligence (AI). In the very near future, attackers will be able to apply AI in every tactic of the MITRE ATT&CK framework and in 59% of its techniques.

As the study's authors note, until recently attackers used AI sparingly: it was applied in only 5% of MITRE ATT&CK techniques, and for another 17% its use was considered promising.

Everything changed with the emergence of large language models (LLMs) and tools such as ChatGPT, which are legal and publicly available. In the year following the release of GPT-4, the number of phishing attacks increased 13-fold.

The analysts particularly noted that AI tools are also popular among cybercriminals because LLMs lack restrictions that would prevent them from generating malicious code or instructions. As a result, such tools are widely used to create various kinds of malware.

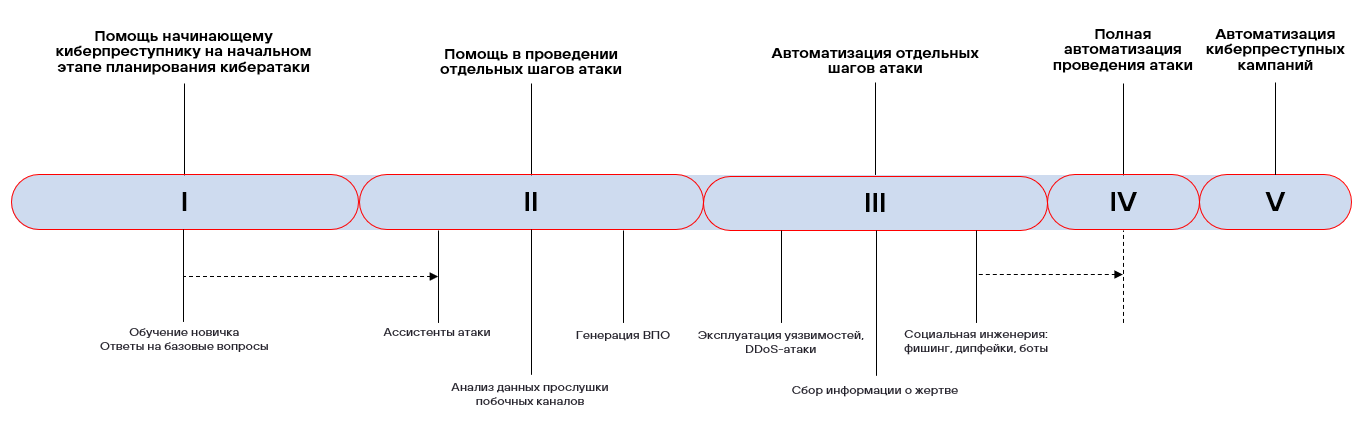

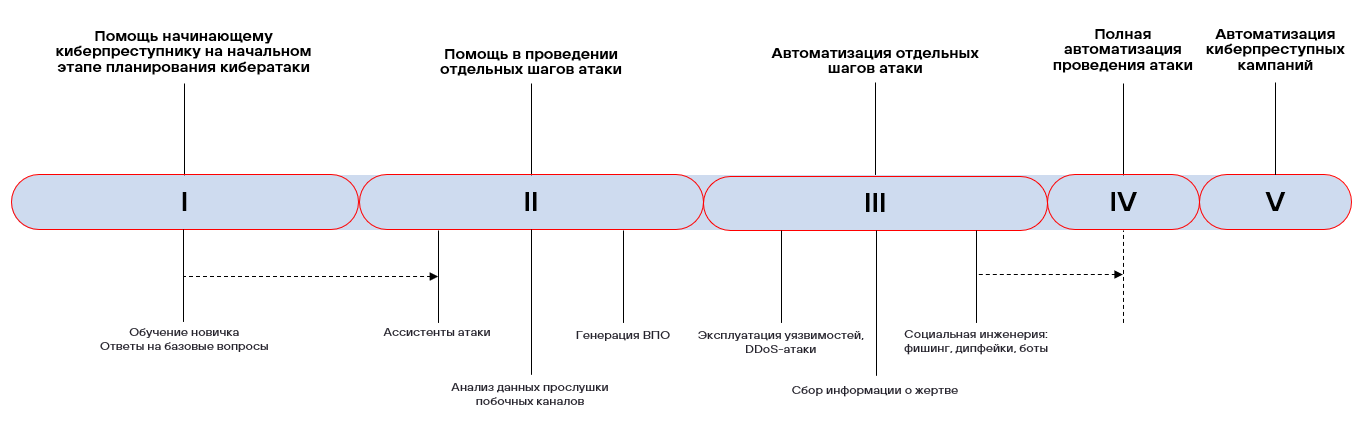

Access to LLMs helps novice cybercriminals prepare for attacks faster. An attacker can use them to check whether they have missed something or to explore different approaches to specific steps of a campaign.

Advanced search tools help a novice attacker find the information they need and answers to basic questions. The study's authors pay particular attention to developing countries, where companies and government agencies are less well protected.

Among the attack methods in which inexperienced attackers use AI most widely, the study's authors highlighted phishing, social engineering, attacks on web applications, weak-password attacks, SQL injection, and network sniffing. These methods do not require deep technical knowledge and are easy to carry out with publicly available tools.

Even at the current level of technology, AI can automatically generate fragments of malicious code, phishing messages, and deepfakes that make standard social engineering scenarios more convincing. It can also automate individual stages of cyberattacks; here the study's authors particularly highlighted botnet management. However, only experienced attackers are able to develop new AI tools for automating and scaling cyberattacks.

If attackers manage to automate attacks on a chosen target, the next step may be tools that search for targets on their own. For experienced cybercriminals, AI will provide tools for quickly collecting data on potential victims from a variety of sources.

AI is actively used to exploit vulnerabilities, and the potential of these tools has not yet been fully realized. AI helps create bots that imitate human behavior with a high degree of accuracy. Deepfakes, which have already reached a fairly high level of plausibility, are also actively used in attacks on both individuals and companies.

> "No attack can yet be said to have been carried out entirely by artificial intelligence. However, the information security world is gradually moving toward autopilot in both defense and attack. We predict that over time, AI-powered cybercriminal tools and modules will be combined into clusters that automate more and more stages of an attack, until they can cover most of the steps," the study's authors warn.

> "The high potential of artificial intelligence in cyberattacks is not a reason to panic," says Roman Reznikov, an analyst in the research group of the Positive Technologies analytics department. "We need to look realistically at the future, study the capabilities of new technologies, and work systematically on ensuring effective cybersecurity. The logical countermeasure to offensive AI is more effective defensive AI, which will help overcome the shortage of cybersecurity specialists by automating many processes."